In this article we’ll see how to perform brain tumor segmentation from MRI images. We’ll try several architectures that are popular for image segmentation problems.

Let’s start by defining what our business problem is.

Business Problem:

A brain tumor is an abnormal mass of tissue in which cells grow and multiply uncontrollably, unchecked by the mechanisms that control normal cells.

Brain tumors are classified as benign or low grade (grade I or II) and malignant or high grade (grade III or IV). Benign tumors are non-cancerous and are considered non-progressive; their growth is relatively slow and limited.

Malignant tumors, however, are cancerous and grow rapidly with poorly defined boundaries. Early detection of brain tumors is therefore crucial for proper treatment and for saving lives.

Problem Statement:

The problem we are trying to solve is image segmentation. Image segmentation is the process of assigning a class label (such as person, car, or tree) to each pixel of an image.

You can think of it as classification at the pixel level: instead of assigning one label to the entire image, we classify each pixel separately.
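To make pixel-level classification concrete, here is a minimal sketch. A segmentation model with a sigmoid output produces one tumor probability per pixel. Thresholding that map turns it into a binary mask with exactly one label per pixel. The probabilities below are made up for illustration, and the 0.5 cutoff is an assumed threshold.

```python
import numpy as np

# Hypothetical 3x4 per-pixel tumor probabilities, as a segmentation
# model with a sigmoid output might produce for a tiny image.
probs = np.array([
    [0.05, 0.10, 0.20, 0.05],
    [0.10, 0.85, 0.90, 0.15],
    [0.05, 0.70, 0.40, 0.10],
])

# Classify every pixel independently: 1 = tumor, 0 = background.
THRESHOLD = 0.5  # assumed decision threshold
mask = (probs > THRESHOLD).astype(np.uint8)

print(mask)
print("tumor pixels:", int(mask.sum()))
```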

Dataset:

The dataset was downloaded from Kaggle.

This dataset contains brain MRI images together with manual FLAIR abnormality segmentation masks.

The images were obtained from The Cancer Imaging Archive (TCIA). They correspond to 110 patients included in The Cancer Genome Atlas (TCGA) lower-grade glioma collection with at least fluid-attenuated inversion recovery (FLAIR) sequence and genomic cluster data available.

Tumor genomic clusters and patient data are provided in the data.csv file. The images are in TIFF (.tif) format.
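The pre-processing code later in this article reads image and mask paths from a DataFrame `df` with the columns `train` and `mask`. Below is one way such a table could be built. It assumes the usual layout of the Kaggle download: one sub-folder per patient, in which each slice `X.tif` has its mask stored alongside as `X_mask.tif`. The helper name `build_dataframe` and the dataset path are hypothetical.

```python
import glob
import os

import pandas as pd


def build_dataframe(root):
    """Pair every MRI slice under `root` with its mask.

    Assumes one sub-folder per patient, where each slice `X.tif`
    has a mask named `X_mask.tif` in the same folder.
    """
    mask_paths = sorted(glob.glob(os.path.join(root, '*', '*_mask.tif')))
    # derive each image path by dropping the '_mask' suffix
    image_paths = [p.replace('_mask.tif', '.tif') for p in mask_paths]
    return pd.DataFrame({'train': image_paths, 'mask': mask_paths})


# hypothetical location of the unzipped dataset:
# df = build_dataframe('/content/lgg-mri-segmentation/kaggle_3m')
```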

Exploratory Analysis and Pre-Processing:

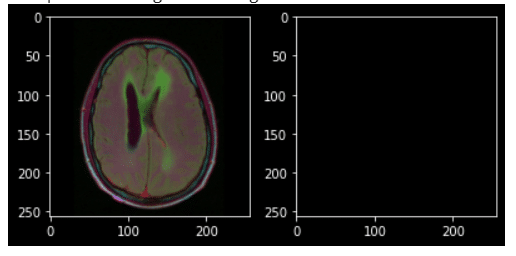

The following is a sample image and its corresponding mask from our data set.

Let’s display a brain image that contains a tumor, along with its mask.

Now let’s check the distribution of tumorous and non-tumorous images in the data set.

Here 1 indicates tumor and 0 indicates no tumor. We have a total of 2556 non-tumorous and 1373 tumorous images.
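The label can be derived directly from each mask. An image counts as tumorous if its mask contains at least one non-zero pixel. The sketch below shows that rule on small synthetic masks. The commented line shows how it could be applied to a `df` with a `mask` path column (an assumption, matching the pre-processing code).

```python
import numpy as np
import pandas as pd


def has_tumor(mask):
    """Return 1 if the mask contains any non-zero (tumor) pixel, else 0."""
    return int(np.max(mask) > 0)


# synthetic example: two empty masks and one mask with a tumor region
masks = [
    np.zeros((4, 4), dtype=np.uint8),
    np.zeros((4, 4), dtype=np.uint8),
    np.full((4, 4), 255, dtype=np.uint8),
]
labels = pd.Series([has_tumor(m) for m in masks], name='label')
print(labels.value_counts())

# on the real data (assumed `df` with a 'mask' column of file paths):
# df['label'] = [has_tumor(cv2.imread(p, cv2.IMREAD_GRAYSCALE)) for p in df['mask']]
```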

As a pre-processing step, we’ll crop each image to the region that contains only the brain. Before cropping, however, we have to deal with one major problem: low contrast.

MRI images often suffer from low contrast, so enhancing the contrast of the images can noticeably improve the performance of the models.

For instance, take a look at the following image from our data set.

To the naked eye we cannot see anything; the image looks completely black. Let’s try enhancing the contrast of this image.

There are two common ways to enhance the contrast.

- Histogram Equalization and

- Contrast Limited Adaptive Histogram Equalization (CLAHE)
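Global histogram equalization remaps every intensity through the image’s normalized cumulative histogram (CDF). Intensities crowded into a narrow band get spread across the full 0 to 255 range. The NumPy sketch below implements that textbook formula for one 8-bit channel to show the idea. It is not OpenCV’s source code, and its rounding may differ slightly from `cv2.equalizeHist()`. CLAHE applies the same idea per tile, after clipping each tile’s histogram at a limit.

```python
import numpy as np


def equalize_hist(channel):
    """Global histogram equalization of one uint8 channel via its CDF."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the darkest intensity present
    total = cdf[-1]
    if total == cdf_min:
        # a constant image has nothing to spread out
        return channel.copy()
    # map each intensity through the normalized CDF onto [0, 255]
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]


# a dark, low-contrast "image" whose values only span 10..40
low = np.tile(np.arange(10, 41, dtype=np.uint8), (8, 1))
out = equalize_hist(low)
print(low.min(), low.max(), "->", out.min(), out.max())
```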

First we’ll try histogram equalization, using OpenCV’s equalizeHist().

```python
import cv2
import matplotlib.pyplot as plt

# img is the BGR image loaded earlier with cv2.imread().
# Since this is a colour image, we apply histogram equalization
# to each of the three channels separately.
# cv2.split returns the three channels in the order B, G, R.
b, g, r = cv2.split(img)

# apply histogram equalization to the three channels separately
b = cv2.equalizeHist(b)
g = cv2.equalizeHist(g)
r = cv2.equalizeHist(r)

# merge the three channels back into one image
equ = cv2.merge((b, g, r))

# convert to RGB so matplotlib shows the right colours
equ = cv2.cvtColor(equ, cv2.COLOR_BGR2RGB)
plt.imshow(equ)
```

The following is the histogram equalized image.

Now let’s apply CLAHE, using OpenCV’s createCLAHE().

```python
import cv2
import matplotlib.pyplot as plt

# Do the same as we did for histogram equalization.
# Set the clip limit and the tile grid size; changing these
# values gives a different output.
clahe = cv2.createCLAHE(clipLimit=6, tileGridSize=(16, 16))

# split the three channels (img is the BGR image loaded earlier)
b, g, r = cv2.split(img)

# apply CLAHE to the three channels separately
b = clahe.apply(b)
g = clahe.apply(g)
r = clahe.apply(r)

# merge the three channels
bgr = cv2.merge((b, g, r))

# convert to RGB and plot with matplotlib
# (Colab's cv2_imshow expects BGR, so it would swap the colours of an RGB array)
cl = cv2.cvtColor(bgr, cv2.COLOR_BGR2RGB)
plt.imshow(cl)
```

The following is the image after applying CLAHE.

Comparing the two results, we can conclude that CLAHE produces the better output: the histogram-equalized image looks unnatural, whereas the CLAHE image does not.

Now we can proceed to crop the image.

The following is the procedure we’ll follow to crop an image.

1) First we’ll load the image.

2) Then we’ll apply CLAHE to enhance the contrast of the image.

3) Once the contrast is enhanced we’ll detect edges in the image.

4) Then we’ll apply dilation to close small gaps between the detected edges, so that the outline of the brain forms one connected contour.

5) Now we can find the contours in the image. We take the largest contour, find its extreme points (leftmost, rightmost, topmost and bottommost), and crop the image to them.

The above image depicts the contrast enhancement and cropping process for a single image. We’ll do the same for every image in the data set.

The following code performs the pre-processing step and saves the cropped images and their masks.

```python
import os

import cv2
from tqdm import tqdm

TRAIN_DIR = '/content/train/'
MASK_DIR = '/content/mask/'


def crop_img(df):
    os.makedirs(TRAIN_DIR, exist_ok=True)
    os.makedirs(MASK_DIR, exist_ok=True)

    # loop through all the images and their corresponding masks
    for i in tqdm(range(len(df))):
        image = cv2.imread(df['train'].iloc[i])
        mask = cv2.imread(df['mask'].iloc[i])

        # enhance the contrast with CLAHE, one channel at a time
        clahe = cv2.createCLAHE(clipLimit=4, tileGridSize=(16, 16))
        b, g, r = cv2.split(image)
        b = clahe.apply(b)
        g = clahe.apply(g)
        r = clahe.apply(r)
        bgr = cv2.merge((b, g, r))

        # keep the enhanced image in BGR order: cv2.imwrite expects BGR,
        # so saving an RGB array would swap the red and blue channels
        imgc = bgr.copy()

        # detect edges, then dilate them to close small gaps
        edged = cv2.Canny(bgr, 10, 250)
        edged = cv2.dilate(edged, None, iterations=2)

        # find the external contours of the dilated edge map
        # (OpenCV 4.x returns (contours, hierarchy))
        cnts, _ = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL,
                                   cv2.CHAIN_APPROX_SIMPLE)

        img_name = os.path.basename(df['train'].iloc[i])
        mask_name = os.path.basename(df['mask'].iloc[i])

        if len(cnts) == 0:
            # if there are no contours, save the CLAHE-enhanced image as is
            new_image = imgc
        else:
            # find the extreme points of the largest contour and crop
            # https://www.pyimagesearch.com/2016/04/11/finding-extreme-points-in-contours-with-opencv/
            c = max(cnts, key=cv2.contourArea)
            extLeft = tuple(c[c[:, :, 0].argmin()][0])
            extRight = tuple(c[c[:, :, 0].argmax()][0])
            extTop = tuple(c[c[:, :, 1].argmin()][0])
            extBot = tuple(c[c[:, :, 1].argmax()][0])
            new_image = imgc[extTop[1]:extBot[1], extLeft[0]:extRight[0]]
            mask = mask[extTop[1]:extBot[1], extLeft[0]:extRight[0]]

        # save the image and its corresponding mask
        cv2.imwrite(os.path.join(TRAIN_DIR, img_name), new_image)
        cv2.imwrite(os.path.join(MASK_DIR, mask_name), mask)


crop_img(df)
```

Evaluation Metrics:

Before proceeding to the modelling part we need to define our evaluation metrics.

The most popular metrics for image segmentation problems are the Dice coefficient and Intersection over Union (IoU).

IoU:

IoU is the area of overlap between the predicted segmentation and the ground truth divided by the area of union between the predicted segmentation and the ground truth.

$$\mathrm{IoU} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN} + \mathrm{FP}}$$

Dice Coefficient:

The Dice coefficient is twice the area of overlap divided by the total number of pixels in both masks (the prediction and the ground truth).

$$\mathrm{Dice} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FN} + \mathrm{FP}}$$
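Both formulas can be computed from the TP/FP/FN counts of two binary masks. The NumPy sketch below does this on a tiny worked example. Returning 1.0 when both masks are empty is an assumed convention: it matters for non-tumor images, where the ground truth has no positive pixels.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """TP, FP and FN pixel counts for two binary masks."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    return int(tp), int(fp), int(fn)


def iou(y_true, y_pred):
    tp, fp, fn = confusion_counts(y_true, y_pred)
    denom = tp + fp + fn
    # assumed convention: two empty masks agree perfectly
    return 1.0 if denom == 0 else tp / denom


def dice(y_true, y_pred):
    tp, fp, fn = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


gt = np.array([[1, 1, 0], [0, 1, 0]])
pred = np.array([[1, 0, 0], [0, 1, 1]])
print(confusion_counts(gt, pred))  # (TP, FP, FN) = (2, 1, 1)
print(iou(gt, pred), dice(gt, pred))
```

Here IoU = 2/4 = 0.5 and Dice = 4/6 ≈ 0.667. In general Dice = 2·IoU / (1 + IoU), so the two metrics rank predictions in the same order.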

Subtracting the Dice coefficient from 1 gives the Dice loss. Alternatively, some people calculate the Dice loss as the negative Dice coefficient, -(dice coefficient). We can choose either one.

However, the range of the dice loss differs based on how we calculate it. If we calculate dice loss as 1-dice_coeff then the range will be [0,1] and if we calculate the loss as -(dice_coeff) then the range will be [-1, 0].
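To be usable as a training loss, Dice is usually computed on predicted probabilities rather than thresholded masks (a "soft" Dice). The NumPy sketch below shows both loss variants and their ranges. The smoothing term `smooth=1.0` is an assumed, commonly used value that avoids division by zero on empty masks. Because of it, a completely wrong prediction stays slightly inside the bounds: 0.8 rather than 1 in this example. In a deep learning framework, the same formula would be written with that framework’s tensor operations.

```python
import numpy as np


def soft_dice_coef(y_true, y_pred, smooth=1.0):
    """Dice on probabilities; `smooth` (assumed 1.0) guards against empty masks."""
    y_true = np.asarray(y_true, dtype=np.float64).ravel()
    y_pred = np.asarray(y_pred, dtype=np.float64).ravel()
    intersection = np.sum(y_true * y_pred)
    return (2.0 * intersection + smooth) / (np.sum(y_true) + np.sum(y_pred) + smooth)


def dice_loss_one_minus(y_true, y_pred):
    return 1.0 - soft_dice_coef(y_true, y_pred)  # range roughly [0, 1]


def dice_loss_negative(y_true, y_pred):
    return -soft_dice_coef(y_true, y_pred)  # range roughly [-1, 0]


y_true = np.array([1, 1, 0, 0])
perfect = np.array([1.0, 1.0, 0.0, 0.0])
disjoint = np.array([0.0, 0.0, 1.0, 1.0])
print(dice_loss_one_minus(y_true, perfect), dice_loss_negative(y_true, perfect))
print(dice_loss_one_minus(y_true, disjoint), dice_loss_negative(y_true, disjoint))
```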

If you want to learn more about IOU and Dice Coefficient you might want to read this excellent article by Ekin Tiu.

Models:

I trained three models in total:

- Fully Convolutional Network (FCN32)

- U-Net and

- ResUNet
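U-Net and ResUNet share an encoder-decoder layout. The encoder halves the spatial resolution at each level. The decoder doubles it back, and at every level it concatenates the encoder feature map of the same size (the skip connection). Those sizes only line up if the input side length is divisible by 2 raised to the number of down-sampling steps. The sketch below computes the per-level sizes under two assumptions: "same"-padded convolutions (as in most Keras implementations) and four down-sampling steps, as in the original U-Net. The helper name is hypothetical.

```python
def unet_feature_sizes(size, depth=4):
    """Spatial side length at each encoder and decoder level of a U-Net-style
    network that halves the resolution `depth` times and doubles it back.
    Assumes 'same'-padded convolutions (hypothetical helper)."""
    if size % (2 ** depth) != 0:
        raise ValueError(f"input size {size} must be divisible by {2 ** depth}")
    encoder = [size // 2 ** level for level in range(depth + 1)]
    # each decoder level is concatenated with the encoder map of equal size
    decoder = encoder[-2::-1]
    return encoder, decoder


print(unet_feature_sizes(256))
```

For a 256x256 input this yields encoder sizes 256, 128, 64, 32, 16 and decoder sizes 32, 64, 128, 256.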

The results of the models are below.

As you can see from the above results, the ResUNet model performs best of the three models.

However, the IoU values are close to 1, which is almost perfect. This could be because the non-tumor area of each image is much larger than the tumor area.

To confirm that the high test IoU is not caused by this imbalance, let’s calculate the IoU separately for the tumorous and non-tumorous images.

We’ll first divide our test data into two separate data sets. One with tumorous images and the other with non-tumorous images.

Once the data set is divided, we can load our ResUNet model, make predictions, and compute the scores for the two subsets separately.
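A minimal sketch of that split-and-score step is shown below. It assumes the ground-truth masks and the model’s thresholded predictions are already available as binary NumPy arrays; loading the ResUNet and running `predict` are omitted. An image counts as tumorous if its ground-truth mask has any positive pixel. The IoU definition for an empty mask matters a lot for the non-tumorous subset. Here an empty prediction on an empty mask scores 1, which is an assumed convention. The helper names are hypothetical.

```python
import numpy as np


def iou_score(y_true, y_pred):
    """IoU of two binary masks; two empty masks count as a perfect match."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    union = np.sum(y_true | y_pred)
    if union == 0:
        return 1.0  # assumed convention for empty ground truth and prediction
    return float(np.sum(y_true & y_pred) / union)


def split_iou(masks, preds):
    """Mean IoU on tumorous and non-tumorous images, computed separately."""
    tumor, no_tumor = [], []
    for mask, pred in zip(masks, preds):
        # tumorous if the ground-truth mask has any positive pixel
        bucket = tumor if np.any(mask) else no_tumor
        bucket.append(iou_score(mask, pred))
    mean_tumor = float(np.mean(tumor)) if tumor else float('nan')
    mean_no_tumor = float(np.mean(no_tumor)) if no_tumor else float('nan')
    return mean_tumor, mean_no_tumor, len(tumor), len(no_tumor)


# tiny synthetic example: two tumorous and two non-tumorous 2x2 "images"
masks = [np.array([[1, 1], [0, 0]]), np.array([[0, 1], [1, 1]]),
         np.zeros((2, 2), int), np.zeros((2, 2), int)]
preds = [np.array([[1, 1], [0, 0]]), np.array([[0, 0], [1, 1]]),
         np.zeros((2, 2), int), np.array([[0, 0], [0, 1]])]
print(split_iou(masks, preds))
```

On the real data, `masks` would hold the test masks and `preds` the ResUNet outputs after thresholding.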

The following are the results separately on the tumorous and non-tumorous images.

The numbers look reasonable. So we can conclude that the high score is not just a result of bias towards the non-tumorous images, whose background occupies a much larger area than the tumor regions.

The following are the sample results of the ResUNet model.

The results look good. The image on the left is the input image, the middle one is the ground truth, and the one on the right is our model’s (ResUNet) prediction.

To get the complete code for this article, visit this GitHub repo.

References:

1) https://www.pyimagesearch.com/

2) https://opencv-python-tutroals.readthedocs.io/en/latest/index.html

3) https://www.kaggle.com/bonhart/brain-mri-data-visualization-unet-fpn

4) https://www.kaggle.com/monkira/brain-mri-segmentation-using-unet-keras