In his Labour Day speech to the trade unions, Dr Keith Rowley had this to say:

“It is only a matter of time before the presence of guard rails in the workplace are changed and generative AI, with its enormous capacity for data, pattern recognition and automation capabilities, forces a new mix and style of work… heavy investment in the technological development in AI is not only recalibrating, but also reinventing the world of work, which poses a challenge for the whole society… there is potential for great improvement alongside the possibility of frightening abuse.”

He put the unions on notice that drastic changes might be ahead in their workplaces.

The general understanding is that AI in the workplace will replace workers in many disciplines, though, as new technologies have done in the past, it may also create new jobs. Even at this early stage, however, there are concerns about whether this emerging technology, like the IoT (Internet of Things), will degenerate into hype or become integrated into the technologies of our economies.

Generative AI chatbots have been trained on data from the Internet and have inherited many of its unsolved problems, including bias, misinformation, copyright infringement, fake news, human rights abuse and all-round economic upheaval. Real-world data retrieved from the Internet, especially text and images, is riddled with bias, from gender stereotypes to racial discrimination. AI models trained on this data therefore encode these biases and then reinforce them through use. In the US, for example, these AI tools tend to portray engineers as white and male and nurses as white and female. Black people risk being misidentified by police departments’ AI facial recognition programs, leading to wrongful arrests, and hiring algorithms favour men over women, entrenching a bias they were sometimes brought in to address. Without new data sets or a new way to train models, the root cause of the problem is here to stay.

Admittedly, techniques such as reinforcement learning from human feedback can steer a model’s output towards the kind of information its designers want, and synthetic data sets could also be used for training. But critics see these solutions as Band-Aids on poor base models, hiding rather than fixing the problem. The prediction is that bias will remain an inherent feature of most AI models and will need human workarounds.
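To make the point about models encoding and then strengthening bias concrete, here is a deliberately tiny sketch in Python. It assumes a made-up training set in which “engineer” is paired with “he” 70% of the time, and a toy “model” that simply counts word pairs and always outputs the most likely one. Real chatbots are far more complex; this only illustrates one way a skew in the data can become a larger skew in the output, and grow again if that output is fed back in as training data.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: (occupation, pronoun) pairs in which
# "engineer" appears with "he" 70% of the time and "nurse" with "she" 80%.
CORPUS = (
    [("engineer", "he")] * 70 + [("engineer", "she")] * 30
    + [("nurse", "she")] * 80 + [("nurse", "he")] * 20
)


def fit(corpus):
    """'Train' by counting how often each pronoun is paired with each occupation."""
    counts = defaultdict(Counter)
    for occupation, pronoun in corpus:
        counts[occupation][pronoun] += 1
    return counts


def generate(model, occupation):
    """Always return the single most likely pronoun (greedy output)."""
    return model[occupation].most_common(1)[0][0]


def share(model, occupation, pronoun):
    """Fraction of an occupation's examples that use the given pronoun."""
    return model[occupation][pronoun] / sum(model[occupation].values())


model = fit(CORPUS)
outputs = [("engineer", generate(model, "engineer")) for _ in range(100)]

# Feed the model's own outputs back in as new training data.
model_next = fit(CORPUS + outputs)

print(f"'he' share in original data : {share(model, 'engineer', 'he'):.2f}")
print(f"'he' share in model outputs : {sum(p == 'he' for _, p in outputs) / len(outputs):.2f}")
print(f"'he' share after retraining : {share(model_next, 'engineer', 'he'):.2f}")
```

With these invented numbers, the 70% skew in the data becomes 100% in the model’s output and 85% in the retrained data.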

Copyright issues

Another concern is AI’s impact on copyright. In training AI models, little attention has been paid to who owns the works (the intellectual property, or IP) being used. As a result, writers, composers and artists in the US have launched class action suits against AI companies for profiting from their work without consent. For example, Universal Music Corp., Sony Music Entertainment and Warner Music have filed lawsuits in federal courts in Massachusetts and New York against Suno AI and Uncharted Labs Inc. (developer of Udio AI), alleging that their copyrighted material was used to train AI models without permission.

Artists, meanwhile, are fighting back with technology of their own. One tool, Nightshade, lets users alter their images in ways that are imperceptible to human viewers but highly damaging to machine learning models trained on them: the altered images teach the model false associations, so it fails to classify images accurately. For example, a model trained on Nightshade-modified images of dogs may come to interpret a dog as a cat, or something else entirely.

Job security fears

Further, it is said that AI is coming after our jobs. Chatbots can do well in high school tests, professional medical licensing examinations and bar exams. Still, workers who use these tools can substantially outperform those who do not. Some argue that a universal basic income will be needed as AI replaces many workers. But AI is best suited to grunt work (repetitious, boring, exhausting tasks), and its use could let workers focus on the more fulfilling parts of their jobs. In that sense these models can empower workers rather than replace them overall. Technology has been doing this to jobs since the Industrial Revolution: new jobs are created and old ones die out. Still, change is always painful, and net gains can mask individual losses.

These models can also be, and have been, used to spread misinformation. They can produce more persuasive propaganda, harder to detect as such, at massive scale, especially around political elections. There is no way to know in advance all the ways a technology can be used or misused until it is actually used. These tools have to be used by many people so that they can be improved and made to serve in line with society’s norms.

The late, gifted physicist Stephen Hawking warned that AI could eventually take over from us humans, a view that entrepreneur Elon Musk shares. To many this is just fear-mongering that will eventually die out, but its influence on current policy makers may be felt for some time yet. In any case, there are more immediate AI challenges than this doomsday prediction that we have to address. Even at this stage of its development, there are some areas in which AI inherently fails to perform acceptably. One problem arises when an AI model is retrained: it tends to forget its original training material, because the new material overwrites past knowledge (researchers call this catastrophic forgetting). One way to overcome this is, of course, to repeat some of the old training material during retraining.
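The forgetting problem, and the remedy of repeating old material (often called rehearsal or replay), can be seen even in a two-weight model. The sketch below is a toy under stated assumptions: the two “tasks”, the learning rate and the number of passes are all invented for illustration, and the model is a simple linear regression trained by stochastic gradient descent rather than a real AI system.

```python
# Toy illustration of catastrophic forgetting and a rehearsal (replay) remedy.
# The model is a two-weight linear regressor trained by plain stochastic
# gradient descent; the tasks and numbers are hypothetical.

# "Old" task A and "new" task B. One weight vector, w = (1, 2), fits both,
# so the model is capable of remembering A while also learning B.
TASK_A = [((1.0, 0.0), 1.0)]
TASK_B = [((1.0, 1.0), 3.0)]


def predict(w, x):
    """Linear prediction: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w, data, lr=0.1, epochs=500):
    """Run SGD on squared error, cycling through the examples in order."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def loss(w, data):
    """Mean squared error of the model on a task."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


w_old = train([0.0, 0.0], TASK_A)         # learn the original task
w_seq = train(w_old, TASK_B)              # retrain on the new data only
w_replay = train(w_old, TASK_B + TASK_A)  # retrain, repeating old data too

print(f"retrain only : loss A = {loss(w_seq, TASK_A):.3f}, loss B = {loss(w_seq, TASK_B):.3f}")
print(f"with replay  : loss A = {loss(w_replay, TASK_A):.3f}, loss B = {loss(w_replay, TASK_B):.3f}")
```

Retraining on task B alone pulls the weights to about (2, 1), so the error on task A jumps to about 1.0; mixing task A’s example back into the retraining data lets the weights settle near (1, 2), which fits both tasks.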

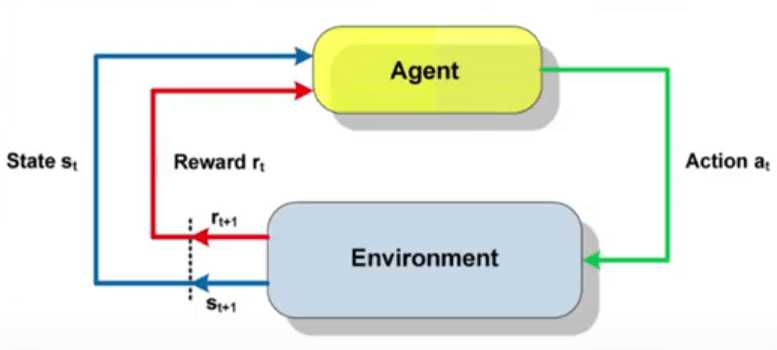

After being fed some data, AI models may be asked to make a decision based on it: for example, whether a person is a criminal, or whether a test shows cancer. Whatever the answer, the way AI reaches such decisions remains largely opaque, which makes human oversight mandatory. AI also cannot respond adequately to something it never saw in training. For example, a model trained to identify trucks may fail to recognize one that has been in a collision and is lying on its side. Indeed, an autonomous vehicle failed to identify, and subsequently killed, a pedestrian pushing a bicycle across a road, because its system had never been trained on such a sight. AI also lacks common sense. For example, a model trained to identify, say, white supremacists by their use of the words “black” and “gay” may not appreciate that Black and gay people also use these words, and may misidentify them because of that usage. AI is also bad at mathematics: given enough resources, today’s neural networks can learn to solve nearly every kind of problem, but not mathematical ones, and researchers still do not understand why. (References: IEEE Spectrum and MIT Technology Review, Jan/Feb 2024.)

In T&T we may lose jobs to AI at the grunt level if our entrepreneurs use it to improve their companies’ productivity. It should be appreciated, however, that this productivity gain really comes from moving basic work to AI, freeing staff to concentrate on more productive work. It is also important for our entrepreneurs and software developers to appreciate the current limitations of AI technology: it carries biases that depend on how it was trained, it lacks common sense when interacting with clients, and its decisions must be corroborated. It remains to be seen whether AI will come to underpin the automation of our business processes, become a challenge to our jobs or even our very existence, or degenerate into nothing but hype.