Preparing for a Machine Learning Engineer position? This article was written for you! Why?

Because we will build upon the Flask prototype and turn it into a fully functional and scalable service. Specifically, we will set up a Deep Learning application served by uWSGI and Nginx. We will go through everything step by step: starting from a simple Flask application, wiring up uWSGI to act as a full web server, and hiding it behind Nginx (as a reverse proxy) to provide more robust connection handling. All this will be done on top of the deep learning project we have built so far, which performs semantic segmentation on images using a custom Unet model and TensorFlow.

Up to this point in the series, we have taken a Colab notebook, converted it into a highly optimized project with unit tests and performance enhancements, trained the model on Google Cloud, and developed a Flask prototype so that it can be served to users.

Now it is time to take that prototype the rest of the way.

What is uWSGI?

According to the official website:

uWSGI is an application server that aims to provide a full stack for developing and deploying web applications and services.

uWSGI is language-agnostic, but its most popular use is serving Python applications. It is built on top of the WSGI spec, and it communicates with other servers over a low-level protocol called uwsgi.

OK, this is very confusing, so let’s clear it up, starting with some definitions.

- WSGI (Web Server Gateway Interface): An interface specification that defines the communication between a web server and a web application. In simple terms, it tells us what the application must implement to pass requests and responses back and forth between the server and the application: a callable that receives the request environment and a `start_response` function. It is basically a standardized API for this communication. It is defined in PEP 3333, and Python’s standard library ships a reference implementation of it in the `wsgiref` module.

- uWSGI: An application server that communicates with applications based on the WSGI spec and with other web servers over a variety of other protocols (such as HTTP). Most of the time it acts like middleware, because it translates requests from a conventional web server into a format that the application understands (WSGI).

- uwsgi: A low-level/binary protocol that enables communication between web servers. uwsgi is a wire protocol that has been implemented by the uWSGI server as its preferred way to speak with other web servers and uWSGI instances. In essence, it defines the format of the data sent between servers and instances.
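To make the WSGI definition more concrete, here is a minimal sketch of a WSGI application written against PEP 3333 using only the standard library. `hello_app` and `call_like_a_server` are illustrative names; the second function imitates, in a simplified way, what a server such as uWSGI does for each request: it builds the `environ` dictionary, passes a `start_response` function, and collects the returned body.

```python
from wsgiref.util import setup_testing_defaults


def hello_app(environ, start_response):
    """A minimal WSGI application: a callable that receives the request
    environment and a start_response function, and returns an iterable of bytes."""
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}".encode("utf-8")
    # First report the status line and headers to the server...
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    # ...then hand back the response body as an iterable of bytes.
    return [body]


def call_like_a_server(app, path="/infer"):
    """Invoke a WSGI app roughly the way a WSGI server would (simplified)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required CGI/WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers
        return lambda data: None  # legacy write() callable, unused here

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body


if __name__ == "__main__":
    status, headers, body = call_like_a_server(hello_app)
    print(status, body)  # 200 OK b'Hello from /infer'
```

The point is that neither side needs to know anything about the other beyond this calling convention, which is exactly what lets uWSGI run a Flask app without any Flask-specific code.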

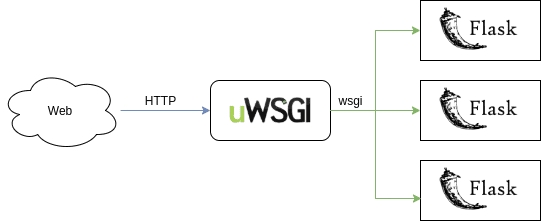

Let’s look at the whole picture now: our software is exposed as a web app using Flask. This application will be served by the uWSGI server, and the two will communicate using the WSGI spec. Moreover, the uWSGI server will now be hidden behind another web server (in our case Nginx), with which it will communicate using the uwsgi protocol. Does it make sense? Seriously, I don’t know why they named all of these with the same initials!
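To get a feel for what a “low-level/binary protocol” means, the sketch below encodes and decodes the variables block of a uwsgi packet, following the layout described in the uWSGI protocol documentation: a 4-byte header (`modifier1`, a 16-bit little-endian `datasize`, `modifier2`) followed by length-prefixed key/value pairs. The function names are hypothetical, and the sketch covers only the header and the variables; in practice Nginx builds this packet for us from `uwsgi_params`, so we never write it by hand.

```python
import struct


def encode_uwsgi_vars(variables, modifier1=0, modifier2=0):
    """Sketch of a uwsgi packet carrying request variables.

    Layout (all integers little-endian):
      header: modifier1 (u8) | datasize (u16) | modifier2 (u8)
      body:   repeated [key_size (u16) | key | val_size (u16) | val]
    struct.pack raises struct.error if any length exceeds 65535.
    """
    body = bytearray()
    for key, value in variables.items():
        k = key.encode("latin-1")
        v = value.encode("latin-1")
        body += struct.pack("<H", len(k)) + k
        body += struct.pack("<H", len(v)) + v
    header = struct.pack("<BHB", modifier1, len(body), modifier2)
    return header + bytes(body)


def decode_uwsgi_vars(packet):
    """Inverse of encode_uwsgi_vars: parse the header, then the key/value pairs."""
    modifier1, datasize, modifier2 = struct.unpack_from("<BHB", packet, 0)
    body = packet[4:4 + datasize]
    variables, offset = {}, 0
    while offset < len(body):
        (klen,) = struct.unpack_from("<H", body, offset)
        offset += 2
        key = body[offset:offset + klen].decode("latin-1")
        offset += klen
        (vlen,) = struct.unpack_from("<H", body, offset)
        offset += 2
        variables[key] = body[offset:offset + vlen].decode("latin-1")
        offset += vlen
    return modifier1, modifier2, variables


packet = encode_uwsgi_vars({"REQUEST_METHOD": "POST", "PATH_INFO": "/infer"})
print(len(packet))  # 45: 4-byte header + 41-byte variables block
print(decode_uwsgi_vars(packet))
```

Compared with the text-based HTTP format, every field here is a fixed-size integer or a length-prefixed string, which is what makes the protocol cheap to parse.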

If I were you, I would still have two more questions.

Why do we need the uWSGI server in the first place (isn’t Flask enough?) and why do we need another web server such as Nginx in front of uWSGI?

And they’re both valid.

Why do we need uWSGI? Isn’t Flask adequate?

While Flask ships with a built-in HTTP server, that server is meant for development and was not designed or optimized for security, scalability, and efficiency. Flask is rather a framework to build web applications, as explained in the previous article. uWSGI, on the other hand, was created as a fully functional application server, and it solves many problems out of the box that Flask doesn’t even touch.

Examples are:

- Process management: it handles the creation and maintenance of multiple processes, so we can run a concurrent app in one environment that is able to scale to multiple users.

- Clustering: it can be used in a cluster of instances.

- Load balancing: it balances the load of requests across different processes.

- Monitoring: it provides out-of-the-box functionality to monitor performance and resource utilization.

- Resource limiting: it can be configured to limit CPU and memory usage up to a specific point.

- Configuration: it has a huge variety of configurable options that give us full control over its execution.
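As an example of the monitoring point, uWSGI can expose a stats server that dumps a JSON document to anyone who connects to it. The sketch below assumes the server was started with a `stats = 127.0.0.1:9191` option (an example address, not part of the configuration used later in this article) and that the document contains a `workers` list with `id` and `requests` fields; `read_uwsgi_stats` and `summarize_workers` are hypothetical helpers, so check the stats server docs for the exact fields in your uWSGI version.

```python
import json
import socket


def read_uwsgi_stats(host="127.0.0.1", port=9191, timeout=2.0):
    """Read the JSON document the uWSGI stats server sends on connect.

    Assumes a `stats = 127.0.0.1:9191` option (example address).
    """
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as conn:
        while True:
            data = conn.recv(4096)
            if not data:  # the stats server closes the connection when done
                break
            chunks.append(data)
    return json.loads(b"".join(chunks).decode("utf-8"))


def summarize_workers(stats):
    """Return (worker id, handled requests) pairs; field names are assumptions."""
    return [(w.get("id"), w.get("requests", 0)) for w in stats.get("workers", [])]
```

With a running server, `summarize_workers(read_uwsgi_stats())` gives a quick view of how requests are spread across worker processes.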

Having said that, let’s inspect our next tool: Nginx.

What is Nginx and why do we need it?

Nginx is a high-performance, highly scalable, and highly available web server (lots of “highly” here). It can act as a load balancer, a reverse proxy, and a caching layer. It can also serve static files, secure and encrypt requests, and rate-limit them, and it is designed to handle more than 10,000 simultaneous connections (in my experience, that claim holds up in practice). It is basically uWSGI on steroids.

Nginx is extremely popular and is part of many big companies’ tech stacks. So why should we use it in front of uWSGI? Well, the main reason is that we simply want the best of both worlds. We want the uWSGI features that are Python-specific, but we also want all the extra functionality Nginx provides. OK, if we don’t expect our application to scale to millions of users, it might be unnecessary, but in this article series, that is our end goal. Plus, it’s incredibly useful knowledge to have as a Machine Learning Engineer. No one expects us to be experts, but knowing the fundamentals can’t really hurt.

In this example, we’re going to use Nginx as a reverse proxy in front of uWSGI. A reverse proxy is simply a system that forwards requests from the web to our web server and passes the responses back. It is the single point of communication with the outside world, and it comes with some incredibly useful features. First of all, it can balance the load of millions of requests and distribute the traffic evenly across many uWSGI instances. Secondly, it provides a layer of security that can help prevent attacks, and it can encrypt communication with clients. Last but not least, it can cache content and responses, resulting in faster performance.
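To illustrate the load-balancing idea, here is a toy model of a reverse proxy that hands incoming requests to upstream instances in turn (round-robin, which is also Nginx’s default method for balancing an upstream group). It is a conceptual sketch, not how Nginx is implemented: `RoundRobinProxy` is a hypothetical class, and the “instances” are plain Python callables standing in for uWSGI servers.

```python
import itertools


class RoundRobinProxy:
    """Toy reverse proxy that spreads requests over upstream handlers in turn.

    A real proxy forwards bytes over the network; here each upstream is a
    hypothetical callable standing in for a uWSGI instance.
    """

    def __init__(self, upstreams):
        if not upstreams:
            raise ValueError("need at least one upstream")
        self._cycle = itertools.cycle(upstreams)

    def handle(self, request):
        upstream = next(self._cycle)  # pick the next instance in turn
        return upstream(request)


def make_instance(name):
    """Create a fake upstream that reports which instance served the request."""
    return lambda request: f"{name} handled {request}"


proxy = RoundRobinProxy([make_instance("uwsgi-1"), make_instance("uwsgi-2")])
responses = [proxy.handle(f"req{i}") for i in range(4)]
# responses alternate: uwsgi-1, uwsgi-2, uwsgi-1, uwsgi-2
```

Clients only ever talk to `proxy`, which is the “single point of communication” property: instances can be added or removed behind it without the outside world noticing.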

I hope that you are convinced by now. But enough with the theory. I think it’s time to get our hands dirty and see how all these things can be configured in practice.

Set up a uWSGI server with Flask

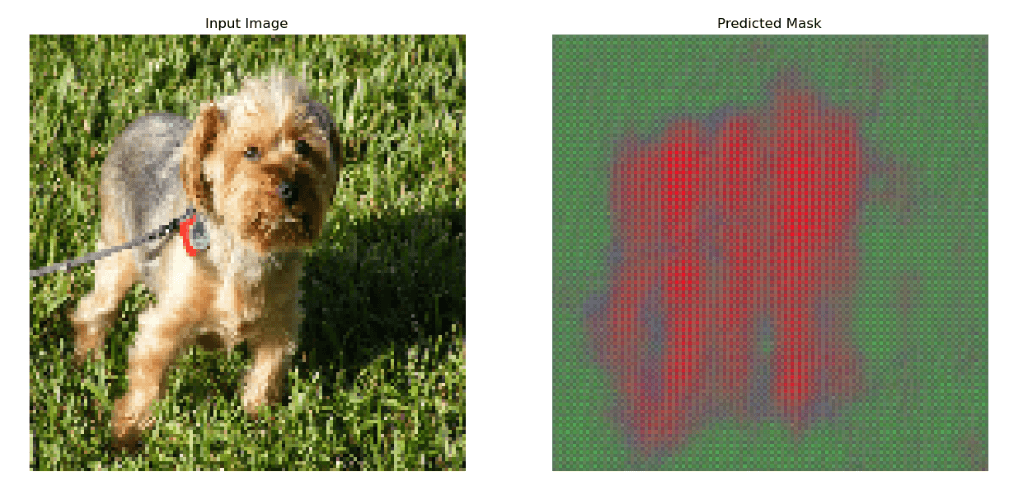

In the previous article, we developed a Flask application. It receives an image as a request, predicts its segmentation mask using the TensorFlow Unet model we built, and returns it to the client.

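```python
import os
import traceback

from flask import Flask, jsonify, request

from executor.unet_inferrer import UnetInferrer

app = Flask(__name__)

# Route and port can be overridden through environment variables
APP_ROOT = os.getenv('APP_ROOT', '/infer')
HOST = "0.0.0.0"
PORT_NUMBER = int(os.getenv('PORT_NUMBER', 8080))

# Create the inferrer once, when the module is loaded, not on every request
u_net = UnetInferrer()


@app.route(APP_ROOT, methods=["POST"])
def infer():
    # The client sends a JSON body with the image under the "image" key
    data = request.json
    image = data['image']
    return u_net.infer(image)


@app.errorhandler(Exception)
def handle_exception(e):
    # Send the stack trace back to the client to ease debugging
    return jsonify(stackTrace=traceback.format_exc())


if __name__ == '__main__':
    # Flask's development server; this block does not run when uWSGI loads the file
    app.run(host=HOST, port=PORT_NUMBER)
```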

The above code will remain intact because, in order to utilize uWSGI, we just need to execute a few small steps on top of the Flask application.

After installing uWSGI with pip,

```bash
pip install uwsgi
```

we can simply spin up an instance with the following command:

```bash
uwsgi --http 0.0.0.0:8080 --wsgi-file service.py --callable app
```

This tells uWSGI to run an HTTP server on 0.0.0.0, port 8080, using the application located in the service.py file, which is where our Flask code lives. We also need to provide the callable parameter: the name of the object inside that file that uWSGI will call according to the WSGI spec. It does not have to be a plain function; any callable object works. In our case, it is the Flask instance we created and bound all the routes to:

```python
app = Flask(__name__)
```
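Since a WSGI server only needs something it can call with the WSGI signature, the callable can be an instance of a class with a `__call__` method, just as the Flask `app` object is. The sketch below uses the standard library’s `wsgiref` server as a stand-in for uWSGI to serve a single request with such an object; `InferApp`, `QuietHandler`, and `serve_once` are hypothetical names for illustration only.

```python
import threading
import urllib.request
from wsgiref.simple_server import WSGIRequestHandler, make_server


class InferApp:
    """A WSGI callable that is an object, not a function (like Flask's `app`)."""

    def __call__(self, environ, start_response):
        body = f"{environ['REQUEST_METHOD']} {environ['PATH_INFO']}".encode()
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [body]


class QuietHandler(WSGIRequestHandler):
    def log_message(self, format, *args):
        pass  # silence the per-request access log


def serve_once(app):
    """Serve one GET /infer request with wsgiref, standing in for uWSGI."""
    server = make_server("127.0.0.1", 0, app, handler_class=QuietHandler)
    port = server.server_port  # port 0 above lets the OS pick a free port
    thread = threading.Thread(target=server.handle_request)
    thread.start()
    with urllib.request.urlopen(f"http://127.0.0.1:{port}/infer") as resp:
        text = resp.read().decode()
    thread.join()
    server.server_close()
    return text
```

`serve_once(InferApp())` returns `"GET /infer"`: the server never needed to know what kind of object it was calling, only that it follows the WSGI convention.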

When we press Enter, a full uWSGI server is spawned, and we can access it on localhost:8080.

It is seriously that easy! And of course, if we run the client script we built in the previous article, it will now hit the uWSGI server and return the segmentation mask of our little Yorkshire terrier.
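For reference, a client call against the uWSGI server could look roughly like the sketch below. It is not the client script from the previous article: it assumes the image is sent as a JSON-serializable value (for example, a nested list of pixel values) under the `"image"` key that the Flask route reads, and that the response is JSON. `build_infer_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request


def build_infer_request(image, url="http://localhost:8080/infer"):
    """Build a POST request with a JSON body {"image": ...}.

    Serializing the image as a nested list is an assumption; reuse whatever
    format your existing client already sends.
    """
    payload = json.dumps({"image": image}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and parse the reply, assuming the server returns JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `send(build_infer_request(image))` posts to port 8080; once Nginx is set up, the same request can be sent to `http://localhost/infer` instead.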

Tip: Note that instead of passing all the parameters using the command line, we can create a simple config file and make the server read directly from it.

And in fact, it is usually the preferred way, especially because we will later deploy the server in the cloud, and it is much easier to change a config option than to alter the terminal command.
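uWSGI command-line options have equivalent keys in the `[uwsgi]` section of an ini file: `--http 0.0.0.0:8080` becomes `http = 0.0.0.0:8080`, and a flag such as `--die-on-term` becomes `die-on-term = true`. The hypothetical helpers below render the same options both ways, which makes the correspondence with the command we ran earlier easy to see (they ignore features such as repeated keys).

```python
def to_cli_args(options):
    """Render options as uWSGI command-line arguments."""
    args = []
    for key, value in options.items():
        if value is False:
            continue  # a disabled flag is simply left out of the command line
        args.append(f"--{key}")
        if value is not True:  # boolean flags take no value on the command line
            args.append(str(value))
    return args


def to_ini(options, section="uwsgi"):
    """Render the same options as an ini file with a [uwsgi] section."""
    lines = [f"[{section}]"]
    for key, value in options.items():
        if isinstance(value, bool):
            value = "true" if value else "false"
        lines.append(f"{key} = {value}")
    return "\n".join(lines) + "\n"


options = {
    "http": "0.0.0.0:8080",
    "wsgi-file": "service.py",
    "callable": "app",
    "die-on-term": True,
}
print(" ".join(["uwsgi"] + to_cli_args(options)))
# uwsgi --http 0.0.0.0:8080 --wsgi-file service.py --callable app --die-on-term
print(to_ini(options))
```

Keeping the options in one place like this is the same idea as the config file: the command stays short, and each setting lives on its own line where it is easy to change.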

A sample config file (app.ini) can look like this:

```ini
[uwsgi]
http = 0.0.0.0:8080
module = app.service
callable = app
die-on-term = true
chdir = /home/aisummer/src/soft_eng_for_dl/
virtualenv = /home/aisummer/miniconda3/envs/Deep-Learning-Production-Course/
processes = 1
master = false
vacuum = true
```

Here we define the “http” address and the “callable” as before, and we use the “module” option to indicate where the Python module with our app is located (app.service refers to app/service.py inside the project directory). Apart from that, we specify a few other things to avoid misconfiguring the server, such as the full directory path of the application (“chdir”) and the virtual environment path (“virtualenv”, if we use one).

Also notice that we don’t use multiple processes here (processes = 1) and that we disable the master process (master = false); more about processes can be found in the docs. “die-on-term” makes uWSGI shut down when it receives a SIGTERM signal instead of reloading, which is what we want when we stop the server from the terminal, and “vacuum” tells uWSGI to remove the files and sockets it generated when it exits.

Configuring a uWSGI server is not straightforward and needs to be carefully examined, because there are so many options and parameters to take into consideration. I will not analyze all the different options and details here, but I suggest looking them up in the official docs. As always, we will provide additional links at the end.

To execute the server, we can do:

```bash
uwsgi --ini app.ini
```

Wire up Nginx as a reverse proxy

The next task on the to-do list is to wire up the Nginx server, which again is as simple as writing a config file.

First, we install it using “apt-get install”:

```bash
sudo apt-get install nginx
```

Next, we create the config file. On Debian/Ubuntu-based Linux distributions, site configurations live inside the “/etc/nginx/sites-available” directory, and we name the file after our application:

```bash
sudo nano /etc/nginx/sites-available/service.conf
```

Then we create a very simple configuration file that contains only the absolute minimum to run the proxy. Again, to discover all the available configuration options, be sure to check out the official documentation.

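```nginx
server {
    listen 80;
    server_name 0.0.0.0;

    location / {
        # Standard set of request parameters that Nginx passes to uWSGI
        include uwsgi_params;
        # Forward the request to uWSGI over its Unix socket (uwsgi protocol)
        uwsgi_pass unix:/home/aisummer/src/soft_eng_for_dl/app/service.sock;
    }
}
```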

Here we tell Nginx to listen on the default HTTP port 80, and `server_name` is matched against the Host header of incoming requests. The `location /` block matches every request path and forwards it to the uWSGI server: `include uwsgi_params` loads the generic parameters (request method, path, headers, and so on) that Nginx passes along, and `uwsgi_pass` sends the request to the defined socket.

What socket, you may wonder? We haven’t created a socket. That’s right. That’s why we need to declare the socket in our uWSGI config file by adding the following two lines:

```ini
socket = service.sock
chmod-socket = 660
```

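This instructs uWSGI to create a Unix socket file named service.sock and to set its permissions to 660 (read and write for the owner and the group), so that Nginx can access it as long as the Nginx worker user belongs to the socket’s group. And remember that Nginx speaks with uWSGI through this socket using the uwsgi protocol.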

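Socket: here we mean a Unix domain socket, an endpoint for inter-process communication that appears as a file on the filesystem. It provides a bidirectional channel that lets two processes on the same machine (in our case Nginx and uWSGI) exchange data without going through the network stack. It should not be confused with a WebSocket, which is a protocol for two-way communication between a browser and a server.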
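To see what `socket = service.sock` and `chmod-socket = 660` amount to at the operating-system level, the sketch below creates a Unix domain socket file with Python’s `socket` module, sets its mode to `0o660`, and sends a few bytes through it. `make_unix_socket` is a hypothetical helper, and the sketch assumes a Unix-like system (Linux or macOS) where `AF_UNIX` is available.

```python
import os
import socket
import stat
import tempfile


def make_unix_socket(path, mode=0o660):
    """Create a listening Unix domain socket file and restrict its permissions.

    Roughly mirrors what `socket = ...` plus `chmod-socket = 660` ask uWSGI to do.
    """
    if os.path.exists(path):
        os.remove(path)  # a stale socket file would make bind() fail
    server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    server.bind(path)  # creates the socket file on the filesystem
    os.chmod(path, mode)  # owner and group may read/write, others may not
    server.listen(1)
    return server


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "service.sock")
        server = make_unix_socket(path)
        info = os.stat(path)
        print(stat.S_ISSOCK(info.st_mode), oct(stat.S_IMODE(info.st_mode)))  # True 0o660
        client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        client.connect(path)  # the "address" is just the file path
        conn, _ = server.accept()
        client.sendall(b"ping")
        print(conn.recv(4))
        for s in (conn, client, server):
            s.close()
```

Because access is controlled by ordinary file permissions, a 660 socket is reachable only by processes running as the owner or as a member of its group, which is why the Nginx user must be in that group.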

Finally, we enable the above configuration by running the command below, which links our config from the “sites-available” directory into the “sites-enabled” directory:

```bash
sudo ln -s /etc/nginx/sites-available/service.conf /etc/nginx/sites-enabled/
```

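Then we check the configuration for syntax errors with `nginx -t` and restart Nginx so that it picks up the new site (the `systemctl` command assumes a systemd-based distribution such as Ubuntu):

```bash
sudo nginx -t
sudo systemctl restart nginx
```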

If everything went well, our app should now be reachable on localhost (port 80), and we can use our client once again to verify that everything works as expected.

Conclusion

We used uWSGI to create a server from our Flask application, and we hid the server behind an Nginx reverse proxy to handle things like security and load balancing. As a result, we officially have a Deep Learning application that is ready to scale to a large number of users. And the best part is that it can be deployed exactly as it is into the cloud and be used by users right now. Or you can set up your own server in your basement, but I would suggest not doing that.

Because of all the steps and optimizations we did, we can be confident about the performance of our application, so we don’t have to worry as much about things like latency, efficiency, and security. And to prove that this is actually true, in the next articles we are going to deploy our Deep Learning app on Google Cloud using Docker containers and Kubernetes. Can’t wait to see you there.

As side material, I strongly suggest the TensorFlow: Advanced Techniques Specialization course by deeplearning.ai hosted on Coursera, which will give you a foundational understanding of TensorFlow.

Au revoir…

Resources

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.