The brain is a great source of inspiration for Alexander Ororbia, an assistant professor of computer science and cognitive science at RIT.

By mimicking how neurons in the brain learn, Ororbia is working to make artificial intelligence (AI) more powerful and energy efficient. His research was recently published in the journal Science Advances.

RIT

RIT Assistant Professor Alexander Ororbia

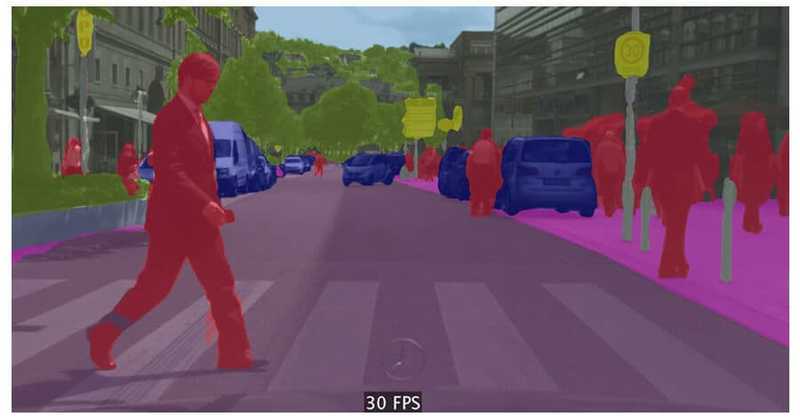

The research article, “Contrastive signal–dependent plasticity: Self-supervised learning in spiking neural circuits,” introduces an AI network architecture that processes information through spikes, much like the electrical signals that brain cells use. The new learning method lets these spiking networks learn in a self-supervised way, which Ororbia says is faster and more adaptable.

Most of the deep neural networks that power modern-day generative AI and large language models learn through an approach called backpropagation of errors. This training method works well, but it must send error signals backward along the long pathway from a network's outputs to its inputs, which costs both time and energy.

“The brain isn’t designed to do learning this way,” said Ororbia. “Things are sparser in the brain—more localized. When you look at an MRI, there are very few neurons going off at one time. If your brain worked like modern AI, and all or most of your neurons fired at the same time, you would simply die.”

Ororbia’s new method addresses problems inherent in backpropagation. It lets the network learn without constant supervised feedback: the network improves its ability to classify and understand patterns by comparing fake data with real data.

The method also works in parallel, meaning different parts of the network can learn at the same time without waiting for each other. Studies have shown that spiking neural networks can be several orders of magnitude more power-efficient than modern-day deep neural networks.

“The new method is like a prescription for how you specifically change the strengths—or plasticity—of the little synapses that connect spiking neurons in a structure,” said Ororbia. “It basically tells AI what to do when it has some data—increase the strength of synapses if you want a neuron to fire later or decrease it to be quiet.”

Ororbia said this new technology will be useful for edge computing, where AI runs directly on devices rather than in distant data centers. For example, more energy-efficient AI would be critical for extending the battery life of autonomous electric vehicles and robotic controllers.

Next, Ororbia is looking to show that his approach can scale and deliver those energy savings in practice. He is working with Cory Merkel, assistant professor of computer engineering, to evaluate the method on powerful neuromorphic chips.

Ororbia is also director of the Neural Adaptive Computing Laboratory (NAC Lab) in RIT’s Golisano College of Computing and Information Sciences, where he is working with researchers to develop more applications for brain-inspired computing.