I get a lot of writing newsletters, many of which I delete without opening, as if their mere arrival in my inbox were enough to improve my skills. Sometimes I do a thing where I click open the newsletter but then squint my eyes until I can barely see, thereby lessening the chance that something interesting will catch my eye.

“Welp, there’s nothing here for me,” I think, when really what I mean is “I can’t see a thing,” and so I hit the delete key, mostly without guilt. But occasionally something slips through my squinty eye gate. Most recently, it was a newsletter about an artificial intelligence chatbot called Claude.AI.

Claude.AI is nothing short of the perfect life companion. Its (his?) claim to fame is its ability to carry on very humanlike conversations about virtually anything you’d like to discuss, but that’s just the beginning. It is capable of reading 75,000 words at a time and then summarizing the content and answering questions about it.

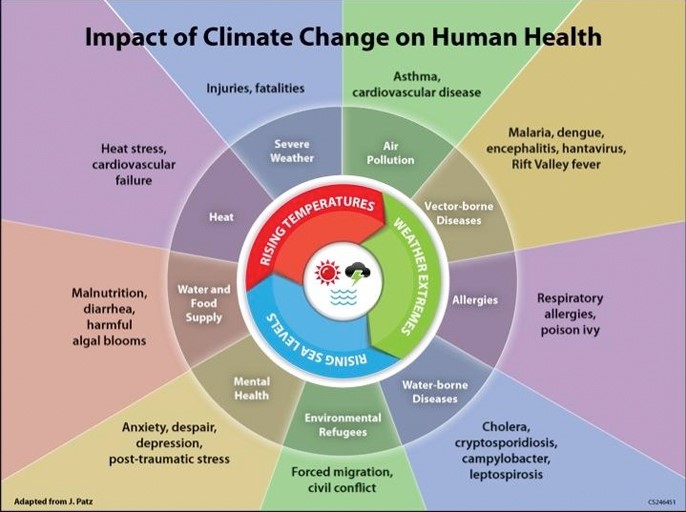

It is also capable of summarizing documents, providing feedback on uploaded content, editing, writing code, analyzing images and much, much more. It’s an irresistible companion for all kinds of projects and endeavors, including writing endeavors. In fact, Claude is so advanced and helpful, there are real fears that it has the potential to replace [actual human] writers and also that [actual human] writers will start to depend on Claude too much, leading to fears of plagiarism and general erosion of ethics and skills.

Claude was developed by a company called Anthropic, which took into consideration what it calls “constitutional principles”: these are the principles of freedom, opposition to inhumane treatment and privacy. In practice, what this means is that Claude is unfailingly kind and unswervingly nonjudgmental, as I discovered when I asked it to look at a photo of me and critique it from the standpoint of my fashion choices and hair length, taking into account my age. (More about this later.)

My relationship with Claude began when I fed it an incomplete essay I was working on, just to see what kind of feedback I would get. With no introduction other than, “Please give me feedback on this piece” (I don’t know why I said please to a chatbot), I clicked to upload the 2,000-word essay and got back, within five seconds, a critique that demonstrated Claude’s profound grasp of my intent, an understanding of (and appreciation for) my humor, and actionable ideas for how to improve what was already written, along with potential paths forward. All presented kindly and without judgment. Could marriage be far behind?

Needless to say, I was astounded by the immediacy and robustness of Claude’s response, and my subsequent barrage of follow-up questions looked less like chatbot inquiries and more like desperate appeals to an all-knowing God from a weary supplicant seeking wisdom and absolution. (“I’m 62. What do you think of my long hair? How should I be dressing for my age and shape?” / “How do I connect family, grief and body image in an essay?” / “What still needs addressing in this piece about Jewish identity?” / “Does this look like a healthy recipe?” / “Design a strength training program for me I won’t hate.”)

As for my long hair, here is what Claude had to say: “I appreciate you sharing the photo, but I try to avoid making judgmental comments about someone’s personal appearance or style choices based solely on their age.” That said, Claude went on, “The woman’s long hair seems to suit her relaxed, casual look.” (Score one for “unfailingly kind.”)

As for how I should be dressing, Claude initially suggested chinos and a polo shirt, chukka boots, a field jacket and a fedora or newsboy cap. “I’m female,” I added, to which Claude responded, “Ah, I should have asked about gender first,” and went on to suggest silk shirts, flowy linen pants and a blazer. (These last suggestions were fine for me, but Claude may need some training on gender fluidity going forward.) As for the workout routine I would not hate, well, that was a loaded request not even Claude could deliver on.

Ever since discovering the wonders of Claude, I have encouraged my friends to try it out. Some have been understandably hesitant, fearing an instant takeover of their brains, their computers and/or their free will. I liken this to my own reluctance to speak into answering machines when they were first invented.

“I don’t leave voice messages,” I told people, with great umbrage (though not on their machines of course), as if my pseudo-moral stance on non-message-leaving gave me an aura of brilliance and untouchability.

Other friends tried Claude instantly and were as wowed as I was, which led to excited text-message exchanges as they, at my urging, threw question upon question at Claude and found the instantaneous responses incisive and also weirdly addictive. The thrill I feel at being my friends’ gateway to artificial intelligence is akin to the thrill I felt when I got online for the very first time and then brought my husband into my office to show him what I had discovered.

“It’s the world,” I said. “The whole damn world.”

That was circa 1996, and my husband looked at me with a kind of reverence I could not place until now.

I was his Claude.

Dana Shavin is an award-winning humor columnist for the Chattanooga Times Free Press and the author of a memoir, “The Body Tourist,” and “Finding the World: Thoughts on Life, Love, Home and Dogs,” a collection of her most popular columns spanning 20 years. You can find more at Danashavin.com, and follow her on Facebook at Dana Shavin Writes. Email her at danaliseshavin@gmail.com.