In part 1 of the Deep Learning in Production course, we defined the goal of this article series: to convert a Python deep learning notebook into production-ready code that can serve millions of users. Towards that end, we continue with a collection of best practices for programming a deep learning model. These practices mostly concern how to write organized, modular, and extensible Python code.

As you can imagine, most of them aren’t exclusive to machine learning applications; they apply to all sorts of Python projects. But here we will see how to apply them in deep learning using a hands-on approach (so brace yourselves for some programming).

One last thing before we start, and something that I will probably repeat a lot in this course: machine learning code is ordinary software and should always be treated as such. Therefore, like any other code, it has a project structure, documentation, and design principles such as object-oriented programming.

Also, I will assume that you have already set up your laptop and environment as we discussed in part 1 (if you haven’t, feel free to do that now and come back).

Project Structure

One very important aspect when writing code is how you structure your project. A good structure should obey the “Separation of concerns” principle, meaning that each functionality should be a distinct component. In this way, it can be easily modified and extended without breaking other parts of the code. Moreover, it can also be reused in many places without the need to write duplicate code.

Tip: Writing the same code once is perfect, twice is kinda fine, but thrice is not.

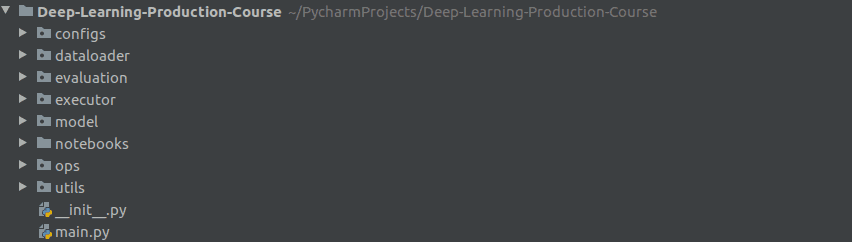

The way I like to organize most of my deep learning projects is something like this :

And that, of course, is my personal preference. Feel free to play around with this until you find what suits you best.

Python modules and packages

Notice that in the project structure I presented, each folder is a separate package that other code can import with a plain “import package_name”. Here, we should make a quick distinction between what Python calls a module and what it calls a package. A module is simply a file containing Python code. A package, however, is a directory that holds sub-packages and modules. For a directory to be treated as a regular package, it should contain an __init__.py file (even if it’s empty). That’s not the case for modules. Thus, in our case each folder is a package and contains an “__init__.py” file.

In our example, we have 8 different packages:

- configs: in configs we define every single thing that can be configurable and can be changed in the future. Good examples are training hyperparameters, folder paths, the model architecture, metrics, flags.

A very simple config is something like this:

    CFG = {
        "data": {
            "path": "oxford_iiit_pet:3.*.*",
            "image_size": 128,
            "load_with_info": True
        },
        "train": {
            "batch_size": 64,
            "buffer_size": 1000,
            "epoches": 20,
            "val_subsplits": 5,
            "optimizer": {
                "type": "adam"
            },
            "metrics": ["accuracy"]
        },
        "model": {
            "input": [128, 128, 3],
            "up_stack": {
                "layer_1": 512,
                "layer_2": 256,
                "layer_3": 128,
                "layer_4": 64,
                "kernels": 3
            },
            "output": 3
        }
    }

- dataloader: quite self-explanatory. All the data loading and data preprocessing classes and functions live here.

- evaluation: a collection of code that evaluates the performance and accuracy of our model.

- executor: in this folder, we usually have all the functions and scripts that train the model or use it to predict something in different environments. By different environments I mean executors for GPUs, executors for distributed systems, and so on. This package is our connection with the outer world and it’s what our “main.py” will use.

- model: contains the actual deep learning code (we are talking about TensorFlow, PyTorch, etc.).

- notebooks: includes all of our Jupyter/Colab notebooks in one place.

- ops: this one is not always needed, as it includes operations not related to machine learning, such as algebraic transformations, image manipulation techniques or maybe graph operations.

- utils: utility functions that are used in more than one place, plus everything that doesn’t fit in any of the above.
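To make the layout concrete, here is a minimal sketch that scaffolds the eight packages in a throwaway directory and imports a module from one of them. The `scaffold_project` helper, the temporary location and the tiny `config.py` file are my own assumptions for illustration; they are not part of the course repo.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# The eight package names from the project structure above.
PACKAGES = ["configs", "dataloader", "evaluation", "executor",
            "model", "notebooks", "ops", "utils"]


def scaffold_project(root: Path) -> list:
    """Create one folder per package, each with an empty __init__.py."""
    created = []
    for name in PACKAGES:
        package_dir = root / name
        package_dir.mkdir(parents=True, exist_ok=True)
        (package_dir / "__init__.py").touch()
        created.append(package_dir)
    return created


# Build the skeleton in a temporary directory (hypothetical location).
project_root = Path(tempfile.mkdtemp(prefix="dl_project_"))
created_dirs = scaffold_project(project_root)

# Drop a tiny module into the configs package and import it.
(project_root / "configs" / "config.py").write_text(
    'CFG = {"train": {"batch_size": 64}}\n')
sys.path.insert(0, str(project_root))
config_module = importlib.import_module("configs.config")

print(config_module.CFG["train"]["batch_size"])
```

In a real project you would of course create the folders once by hand (or with your IDE) and import with a plain `from configs.config import CFG`.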

Now that we have our project well structured, we can begin to see what our code should look like at a lower level.

Object-oriented programming in Python

The answer to that question is classes and everything that comes with them. Admittedly, Object-Oriented Programming (OOP) might not be the first thing that comes to mind when writing Python code (it definitely is when coding in Java), but you will be surprised at how easy it is to develop software when thinking in objects.

Tip: A good way to get there is to try to write Python the same way you would write Java (yup, I know that’s not your usual advice, but you’ll understand what I mean in time).

If we reprogram our UNet model in an object-oriented way, the result would be something like this:

    import tensorflow as tf


    class UNet():

        def __init__(self, config):
            # Parse the config before anything reads self.config
            # (Config is the class shown later in this article).
            self.config = Config.from_json(config)
            self.base_model = tf.keras.applications.MobileNetV2(
                input_shape=self.config.model.input, include_top=False)
            self.batch_size = self.config.train.batch_size
            ...

        def load_data(self):
            """Loads and preprocesses data"""
            self.dataset, self.info = DataLoader().load_data(self.config.data)
            self._preprocess_data()

        def _preprocess_data(self):
            ...

        def _set_training_parameters(self):
            ...

        def _normalize(self, input_image, input_mask):
            ...

        def _load_image_train(self, datapoint):
            ...

        def _load_image_test(self, datapoint):
            ...

        def build(self):
            """Builds the Keras model"""
            layer_names = [
                'block_1_expand_relu',
                'block_3_expand_relu',
                'block_6_expand_relu',
                'block_13_expand_relu',
                'block_16_project',
            ]
            layers = [self.base_model.get_layer(name).output for name in layer_names]
            ...
            self.model = tf.keras.Model(inputs=inputs, outputs=x)

        def train(self):
            ...

        def evaluate(self):
            ...

Remember the code in the Colab notebook. Now look at this again. Do you start to understand what these practices accomplish?

Basically, you can see that the model is a class, each separate functionality is encapsulated within a method, and all the common variables are declared as instance variables.

As you can easily realize, it becomes much easier to alter the training functionality of our model, to change the layers, or to flip the default of a flag. On the other hand, when writing spaghetti code (programming slang for chaotic code), it is much more difficult to find what does what, whether a change affects other parts of the code, and how to debug it.

As a result, we get maintainability, extensibility and simplicity pretty much for free (always remember our principles).

Abstraction and Inheritance

However, using classes gives us a lot more than that. Abstraction and inheritance are two of the most important topics in OOP.

Using abstraction we can declare the desired functionalities without dealing with how they are going to be implemented. This lets us first think about the logic behind our code and only then dive into programming every single part of it. The same pattern can easily be adopted in deep learning code. To better understand what I’m saying, have a look at the code below:

    from abc import ABC, abstractmethod


    class BaseModel(ABC):
        """Abstract Model class that is inherited by all models"""

        def __init__(self, cfg):
            self.config = Config.from_json(cfg)

        @abstractmethod
        def load_data(self):
            pass

        @abstractmethod
        def build(self):
            pass

        @abstractmethod
        def train(self):
            pass

        @abstractmethod
        def evaluate(self):
            pass

One can easily observe that the functions have no body. They are just declarations. Following the same logic, you can think of every functionality you are gonna need, declare it as an abstract function or class and you are done. It’s like having a contract of what the code should look like. That way you can decide first on the high-level design and then tackle each part in detail.

Consequently, that contract can now be used by other classes that will “extend” our abstract class. This is called inheritance. The “child” class inherits from the base class, which immediately defines the structure of the child. So, the new class is obligated to implement all the abstract functions as well. Of course, it can also have many other functions that are not declared in the abstract class.
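To see that the contract is actually enforced, here is a minimal sketch using a stripped-down stand-in for the base class (no config handling) and two hypothetical children, `IncompleteModel` and `CompleteModel`, which I made up for this illustration. Python refuses to instantiate a child that leaves an abstract method unimplemented.

```python
from abc import ABC, abstractmethod


class BaseModel(ABC):
    """Simplified stand-in for the abstract model class."""

    @abstractmethod
    def build(self):
        pass

    @abstractmethod
    def train(self):
        pass


class IncompleteModel(BaseModel):
    """Hypothetical child that forgets to implement train()."""

    def build(self):
        return "built"


class CompleteModel(BaseModel):
    """Hypothetical child that fulfils the whole contract."""

    def build(self):
        return "built"

    def train(self):
        return "trained"


# Instantiating the incomplete child fails with a TypeError.
try:
    IncompleteModel()
    error_message = None
except TypeError as err:
    error_message = str(err)

print(error_message)
print(CompleteModel().train())
```

The error shows up at instantiation time, not when the missing method is eventually called, which is exactly what makes the abstract class useful as a contract.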

The main way to have abstraction in Python is the abc module from the standard library.

Look below how we pass the BaseModel class as an argument in the UNet. That’s all we need. Also, in our case we need to call the __init__ function of the parent class, which we accomplish with “super()”. “super” is a special Python function that gives access to the parent class, so “super().__init__(config)” calls the parent’s constructor (the function that initializes the object, aka __init__). The rest of the code is normal deep learning code.

    class UNet(BaseModel):

        def __init__(self, config):
            super().__init__(config)
            self.base_model = tf.keras.applications.MobileNetV2(
                input_shape=self.config.model.input, include_top=False)
            ...

        def load_data(self):
            self.dataset, self.info = DataLoader().load_data(self.config.data)
            self._preprocess_data()

        ...

        def build(self):
            ...
            self.model = tf.keras.Model(inputs=inputs, outputs=x)

        def train(self):
            self.model.compile(optimizer=self.config.train.optimizer.type,
                               loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                               metrics=self.config.train.metrics)

            model_history = self.model.fit(self.train_dataset, epochs=self.epoches,
                                           steps_per_epoch=self.steps_per_epoch,
                                           validation_steps=self.validation_steps,
                                           validation_data=self.test_dataset)

            return model_history.history['loss'], model_history.history['val_loss']

        def evaluate(self):
            predictions = []
            for image, mask in self.dataset.take(1):
                predictions.append(self.model.predict(image))

            return predictions

Moreover, you can imagine that the base class can be inherited by many children. Because they all share the same interface, the rest of the code can treat them interchangeably (that’s called polymorphism). That way you can have many models with the same base model as a parent but with different logic. On a more practical note, if a new developer joins your team, they can easily find out what their model should look like just by inheriting from our abstract class.
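As a rough illustration of that idea, the sketch below defines two hypothetical children (`TinyUNet` and `TinyClassifier`, names I invented here) of a simplified base class and runs both through the same `run_pipeline` function, which only knows about the shared interface.

```python
from abc import ABC, abstractmethod


class BaseModel(ABC):
    """Simplified stand-in for the abstract model class."""

    @abstractmethod
    def build(self):
        pass

    @abstractmethod
    def train(self):
        pass


class TinyUNet(BaseModel):
    """Hypothetical segmentation model."""

    def build(self):
        return "unet built"

    def train(self):
        return "unet trained"


class TinyClassifier(BaseModel):
    """Hypothetical classification model."""

    def build(self):
        return "classifier built"

    def train(self):
        return "classifier trained"


def run_pipeline(model: BaseModel) -> list:
    """Works for any child of BaseModel, whatever its internal logic."""
    return [model.build(), model.train()]


results = [run_pipeline(model) for model in (TinyUNet(), TinyClassifier())]
print(results)
```

The calling code never checks which concrete class it received, so adding a third model later requires no changes to `run_pipeline`.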

Static and class methods

Static and class methods are another two cool patterns that can simplify our code and make it less error-prone. In OOP we have the class and the instances. The class is like the blueprint for creating objects, and objects are the instances of a class.

Class methods take the class itself as their first argument and are usually used as alternative constructors for creating new instances of the class.

Static methods receive neither the instance nor the class as an implicit argument, so they have no built-in access to instance or class state. They are mostly used for utility functions that aren’t gonna change.
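Before we apply them to our project, here is a small, framework-free sketch of the three kinds of methods side by side. The `ImageSize` class and its methods are hypothetical and exist only for this illustration.

```python
class ImageSize:
    """Hypothetical value object holding an image height and width."""

    def __init__(self, height, width):
        self.height = height
        self.width = width

    @classmethod
    def square(cls, side):
        """Alternative constructor: receives the class, returns an instance."""
        return cls(side, side)

    @staticmethod
    def is_valid(value):
        """Utility check: receives neither the class nor an instance."""
        return isinstance(value, int) and value > 0

    def area(self):
        """Regular instance method: works on this object's state."""
        return self.height * self.width


size = ImageSize.square(128)          # called on the class
print(size.area())
print(ImageSize.is_valid(-1))         # also called on the class, no instance needed
```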

Let’s see how they can be applied in our code:

Below we see how we can construct a config from JSON-style data. The classmethod “from_json” returns an actual instance of the Config class, created by just calling “Config.from_json(cfg)” with the configuration (in the code below it arrives as a dictionary, like the CFG we defined earlier). In other words, it’s a constructor of our class. By doing this, we can have multiple constructors for the same class (we might, for example, want to create a config from a YAML file).

    import json


    class Config:
        """Config class which contains data, train and model hyperparameters"""

        def __init__(self, data, train, model):
            self.data = data
            self.train = train
            self.model = model

        @classmethod
        def from_json(cls, cfg):
            """Creates config from json"""
            # HelperObject is not shown in this article; as an object_hook it must
            # turn every JSON object into something with attribute access.
            params = json.loads(json.dumps(cfg), object_hook=HelperObject)
            return cls(params.data, params.train, params.model)
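The HelperObject passed as object_hook is not shown here, so the sketch below fills it in with one plausible implementation: a class that copies each decoded dictionary into its own attributes. Treat that helper, and the trimmed-down CFG, as assumptions made for illustration rather than the definitive code.

```python
import json

# A trimmed-down version of the config dictionary from earlier.
CFG = {
    "data": {"image_size": 128},
    "train": {"batch_size": 64, "metrics": ["accuracy"]},
    "model": {"output": 3},
}


class HelperObject:
    """Assumed helper: exposes the keys of a dict as attributes."""

    def __init__(self, dict_):
        self.__dict__.update(dict_)


class Config:
    """Config class which contains data, train and model hyperparameters"""

    def __init__(self, data, train, model):
        self.data = data
        self.train = train
        self.model = model

    @classmethod
    def from_json(cls, cfg):
        """Creates config from json"""
        # json.loads calls the object_hook for every decoded JSON object,
        # innermost first, so nested dicts become nested HelperObjects.
        params = json.loads(json.dumps(cfg), object_hook=HelperObject)
        return cls(params.data, params.train, params.model)


config = Config.from_json(CFG)
print(config.train.batch_size)
print(config.train.metrics)
```

This is why the model code can write `self.config.train.batch_size` instead of `self.config["train"]["batch_size"]`. Lists are left untouched by the hook, so `metrics` stays a plain Python list.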

Static methods, on the other hand, are called on the class itself and don’t need an instance of it. A perfect example in our case is the DataLoader class, where we simply want to load the data from a URL. Is there a reason to have a new DataLoader instance? Not really, because this functionality keeps no state that could change. When state does change, it is better to use an instance and instance methods.

    import tensorflow_datasets as tfds


    class DataLoader:
        """Data Loader class"""

        @staticmethod
        def load_data(data_config):
            """Loads dataset from path"""
            return tfds.load(data_config.path, with_info=data_config.load_with_info)

Type Checking

Another cool and useful feature (borrowed from statically typed languages such as Java) is type checking. Type checking is the process of verifying and enforcing the constraints of types. And by types we mean whether a variable is a string, an integer or an object. To be more precise, in Python we have type hints. Python itself doesn’t enforce them at runtime, because it is a dynamically typed language. But we will see how to get around that. Type checking is essential because it can catch bugs and errors very early and can help you write better code overall.

It is very common when coding in Python to have moments where you wonder whether a variable is a string or an integer. You then find yourself tracking it throughout the code trying to figure out what type it is. And it’s much trickier when that code is using TensorFlow or PyTorch.

A very simple way to add type hints is this:

    def ai_summer_func(x: int) -> int:
        return x + 5

As you can see, we declare that both x and the result should be integers.

Note that Python will not raise an error or exception if these hints are violated. They are just a suggestion. IDEs like PyCharm will automatically pick them up and show a warning. That way you can easily detect bugs and fix them as you are building your code.
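A quick way to convince yourself that hints are only suggestions is to break one on purpose. In this small sketch we pass a float to the function above; the interpreter happily runs it, while the hints remain available for tools to inspect through `__annotations__`.

```python
def ai_summer_func(x: int) -> int:
    return x + 5


# A float is passed despite the int hint: no error is raised at runtime.
result = ai_summer_func(2.5)

# The hints are simply stored on the function for IDEs and type checkers.
hints = ai_summer_func.__annotations__

print(result)
print(hints)
```

A static checker or an IDE would flag the `ai_summer_func(2.5)` call, which is exactly the kind of early warning we are after.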

If you want to catch these kinds of errors, you can use a static type checker like Pytype. After installing it and adding type hints to your code, you can run a command like the one below and it will show you all the type errors in your code. Pytype is a Google library and is used in many official TensorFlow codebases:

    $ pytype main.py

One important thing that I need to mention here is that checking types in TensorFlow code is not easy. I don’t want to get into many details, but you can’t simply define the type of x as a “tf.Tensor”. Type checking is great for simpler functions and basic data types, but when it comes to TensorFlow code things can get hard. Pytype has the ability to infer some types from your code and it can resolve types such as Tensor or Module, but it doesn’t always work as expected.

For completeness, linting tools such as Pylint can be great for catching errors from within your IDE. Also check this out. It’s a code style checker specifically designed for TensorFlow code.

Documentation

Documenting our code is the single most important thing in this list and the thing that most of us are guilty of not doing. Writing simple comments in our code can make the life of our teammates, but also of our future selves, much easier. It is even more important when we write deep learning code because of the complex nature of our software. In the same sense, it’s equally important to give proper and descriptive names to our classes, functions and variables. Take a look at this:

    def n(self, ii, im):
        ii = tf.cast(ii, tf.float32) / 255.0
        im -= 1
        return ii, im

I’m 100% certain that you have no idea what it does.

Now look at this:

    def _normalize(self, input_image, input_mask):
        """Normalizes the input image and shifts the mask labels down by one

        Args:
            input_image (tf.Tensor): The input image
            input_mask (tf.Tensor): The image mask

        Returns:
            input_image (tf.Tensor): The normalized input image
            input_mask (tf.Tensor): The new image mask
        """
        input_image = tf.cast(input_image, tf.float32) / 255.0
        input_mask -= 1
        return input_image, input_mask

Can it BE more descriptive? (in Chandler’s voice)

The comments you can see above are called docstrings and are Python’s way to document the responsibility of a piece of code. What I like to do is to include a docstring at the beginning of a file/module, indicating its purpose, as well as under every class declaration and inside every function.

There are many ways to format docstrings. I personally prefer the Google style, which looks like the above code.

The first line always indicates what the code does and it is the only real essential part (I personally try to always include this in my code). The other parts explain what arguments the function accepts (with types and descriptions) and what it returns. These can sometimes be ignored if they don’t provide much value.
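One nice side effect of docstrings is that they are not just comments: Python stores them on the object, so tools (and you) can read them back. The sketch below uses a hypothetical, TensorFlow-free `normalize_pixels` function documented in Google style and pulls out its summary line with the standard `inspect` module.

```python
import inspect


def normalize_pixels(pixels, max_value=255.0):
    """Scale raw pixel values to the range [0, 1].

    Args:
        pixels (list[int]): Raw pixel intensities.
        max_value (float): Largest possible raw intensity.

    Returns:
        list[float]: The scaled pixel values.
    """
    return [p / max_value for p in pixels]


# getdoc() returns the docstring with its indentation cleaned up;
# the first line is the summary that IDEs and help() show first.
summary = inspect.getdoc(normalize_pixels).splitlines()[0]

print(summary)
print(normalize_pixels([0, 255]))
```

Calling `help(normalize_pixels)` in an interactive session prints the same docstring, which is why that first line deserves the most care.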

Combine them all together

All the aforementioned best practices can be combined into the same project, resulting in highly extensible and easily maintainable code. Have a look at our repo on GitHub to see the final result. The code provided in the repo is fully functional and can be used as a template in your own projects.

In this article, we explored some best practices that you can follow when programming deep learning applications. We saw how to structure our project, how object-oriented programming can make our code highly maintainable and extensible, and how to document our code in order to avoid future headaches.

But of course that’s not all we can do. In the next article, we will dive deep into unit testing. We will see how to write unit tests for TensorFlow code, how to use mock objects to simplify your test cases and why test coverage is an essential part of every piece of software that lives online. Finally, we will discuss which parts of your machine learning code are worth testing and what tests you absolutely need to write.

I’m sure you don’t want to miss that. So stay tuned. Or even better subscribe to our newsletter and sleep well at night, knowing that the article will be delivered in your inbox as soon as it’s out.

Have a nice day. Or night..
